Interpretable machine learning: Explaining Change

Fabian Fumagalli, Barbara Hammer

Duration: 2021–2025

Funding: Deutsche Forschungsgemeinschaft (DFG, German Research Foundation): TRR 318/1 2021 – 438445824

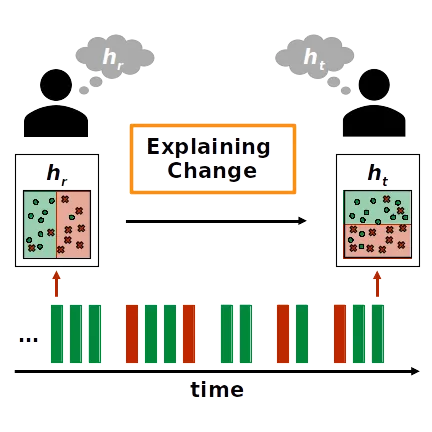

Today, machine learning is commonly used in dynamic environments such as social networks, logistics, transportation, retail, finance, and healthcare, where new data is generated continuously. To respond to possible changes in the underlying processes and to ensure that learned models continue to function reliably, the models must be adapted continuously. These changes, like the model itself, should be made transparent to users through clear explanations that take application-specific needs into account. The researchers working on Project C03 of TRR 318 are studying how and why different types of models change from a theoretical-mathematical perspective. Their goal is to develop algorithms that efficiently and reliably detect changes in models and provide intuitive explanations to users.